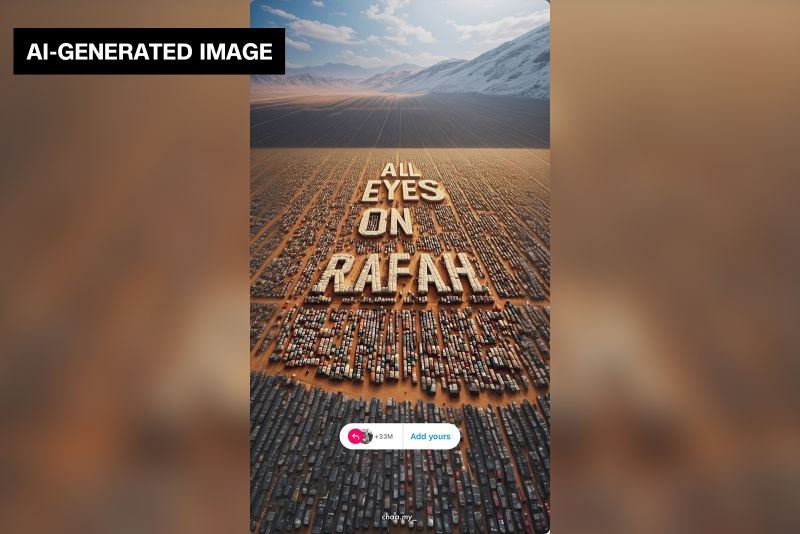

Before turning to the main discussion, it helps to understand the subject matter. Artificial intelligence (AI) has transformed many activities, including image generation. With tools such as DeepArt and DeepDream, free mobile apps, and custom-built algorithms, AI can now produce images that are often mistaken for real photographs. One prominent case is a likely AI-generated image of Gaza that recently spread widely across the internet.

The image in question shows a bird’s-eye view of Gaza, with dense buildings, broad boulevards, and what appears to be a peaceful setting. Shared widely across social media platforms, it offered a view strikingly different from the war-torn Gaza usually depicted. Visually appealing as it was, the image raised questions on close inspection: it contained numerous duplicated elements, slightly distorted shapes, and an unnaturally perfect alignment of city blocks.

Part of AI’s intrigue lies in how quickly and seamlessly generated images can be mistaken for real ones. The Gaza image awed viewers as it circulated. Many users, unaware of what current AI tools can do, shared and reposted it, assuming it was an accurate representation of the city. As a result, the image gained immense popularity seemingly overnight.

What defines the phenomenon is how convincingly these images pass as real. The tool used to create the Gaza image is unknown, but one speculative candidate is a Generative Adversarial Network (GAN), a class of machine-learning models. A GAN consists of two competing networks: a generator that creates new samples and a discriminator that judges whether a sample comes from the real dataset or from the generator. As the two are trained against each other, the discriminator gets better at spotting fakes and the generator gets better at producing samples the discriminator cannot tell apart from real data. Such technology can produce results detailed enough to deceive the human eye at a casual glance.
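To make the generator-versus-discriminator contest more concrete, here is a deliberately tiny Python sketch of one adversarial round. It is an illustration only and says nothing about how the Gaza image was actually made. Everything in it is a hypothetical stand-in: the "real data" are numbers drawn around 4 rather than photographs, the generator is the one-dimensional function `a*z + b`, and the discriminator is a logistic function `sigmoid(w*x + c)` instead of the deep neural networks real GANs use. The updates follow the standard GAN objectives, with the commonly used "non-saturating" loss for the generator.

```python
import math
import random


def sigmoid(s: float) -> float:
    return 1.0 / (1.0 + math.exp(-s))


class Generator:
    """Hypothetical toy generator: turns random noise z into a sample a*z + b."""

    def __init__(self, a: float = 1.0, b: float = 0.0):
        self.a, self.b = a, b

    def sample(self, zs):
        return [self.a * z + self.b for z in zs]


class Discriminator:
    """Hypothetical toy discriminator: D(x) = sigmoid(w*x + c), its estimate that x is real."""

    def __init__(self, w: float = 0.0, c: float = 0.0):
        self.w, self.c = w, c

    def prob_real(self, x: float) -> float:
        return sigmoid(self.w * x + self.c)


def mean(values):
    values = list(values)
    return sum(values) / len(values)


def discriminator_objective(d, real, fake):
    # D wants to score real samples near 1 and generated samples near 0.
    return (mean(math.log(d.prob_real(x)) for x in real)
            + mean(math.log(1.0 - d.prob_real(x)) for x in fake))


def generator_objective(d, fake):
    # Non-saturating generator objective: G wants D to score its samples near 1.
    return mean(math.log(d.prob_real(x)) for x in fake)


def discriminator_step(d, real, fake, lr):
    # One gradient-ascent step on discriminator_objective with respect to (w, c).
    grad_w = (mean((1.0 - d.prob_real(x)) * x for x in real)
              - mean(d.prob_real(x) * x for x in fake))
    grad_c = (mean(1.0 - d.prob_real(x) for x in real)
              - mean(d.prob_real(x) for x in fake))
    d.w += lr * grad_w
    d.c += lr * grad_c


def generator_step(g, d, zs, lr):
    # One gradient-ascent step on generator_objective with respect to (a, b).
    # Chain rule: d/dx log D(x) = (1 - D(x)) * w, with dx/da = z and dx/db = 1.
    fake = g.sample(zs)
    dlog_dx = [(1.0 - d.prob_real(x)) * d.w for x in fake]
    g.a += lr * mean(gx * z for gx, z in zip(dlog_dx, zs))
    g.b += lr * mean(dlog_dx)


if __name__ == "__main__":
    rng = random.Random(0)
    real = [rng.gauss(4.0, 1.0) for _ in range(64)]  # hypothetical stand-in for real photos
    zs = [rng.gauss(0.0, 1.0) for _ in range(64)]    # noise fed to the generator
    g, d = Generator(), Discriminator()

    # Discriminator's turn: learn to tell the current fakes from real data.
    fake = g.sample(zs)
    before_d = discriminator_objective(d, real, fake)
    discriminator_step(d, real, fake, lr=0.05)
    print("D objective:", before_d, "->", discriminator_objective(d, real, fake))

    # Generator's turn: adjust its output to fool the updated discriminator.
    before_g = generator_objective(d, g.sample(zs))
    generator_step(g, d, zs, lr=0.05)
    print("G objective:", before_g, "->", generator_objective(d, g.sample(zs)))
    print("generator offset b:", g.b)
```

Running it prints the discriminator's objective rising after its step, then the generator's objective rising after its own step, with the generator's offset `b` nudged from 0 toward the real data. An image GAN repeats such rounds many times, with deep networks operating on pixels; that repeated back-and-forth is what "learning from each other" refers to.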

AI images also owe part of their popularity to the fact that they can be tailored to fit a desired narrative, as the Gaza image illustrates. Existing photographs of the city, easily accessible online, show a starkly different scene: half-destroyed buildings, sprawling refugee camps, and the visible toll of a long-standing conflict. The spread of the serene AI-generated image thus inadvertently helps obscure the city’s actual conditions. It shows how AI, when used without a critical understanding of its abilities, can help spread misinformation.

Strikingly, the phenomenon isn’t confined to Gaza: similar artificially generated images have been made of densely populated regions around the world. The potential reach of such technology raises a range of ethical and practical issues. In the hands of malicious or unwitting users, AI can create harmful illusions that feed echo chambers.

In essence, the AI-generated Gaza image that took over the internet is just the tip of the iceberg. It shows how far AI technology has advanced and the challenges it poses, which calls for broad public awareness of AI’s capabilities and of their potential impact on how society perceives events.

The Gaza image is a potent reminder of the urgent need to use AI responsibly and with a clear understanding of its capabilities. AI should be used to reflect the truth accurately rather than distort it. As citizens of the digital age, we share a responsibility to stay informed, question what we see, and do our part to curb the spread of misinformation. A likely AI-generated image thus paints a picture much larger than Gaza itself: it reflects our increasingly digitized world.